This blog publishes the latest information and case studies on the use of augmented reality (AR) and virtual reality (VR).

Profile

NAME:

Etsuji Kameyama (亀山悦治)

Profile:

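Augmented reality (AR) and virtual reality (VR) technologies are beginning to be used in both BtoC and BtoB fields. This blog mainly introduces the latest case studies and technologies related to AR, NUI, and various sensors.

If you are considering introducing an AR or VR system or solution, please get in touch via the link here or contact me directly. I handle technology selection, application development, operation, and consulting for the entertainment and printing fields, furniture and equipment placement simulation, operation support, and more.

-Sites I am involved with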

- twitter (@kurakura)

- facebook (ekameyama)

- LinkedIn (Etsuji Kameyama)

- ITmedia Marketing

- SlideShare (ekame)

- paper.li (kurakura/ar)

- YouTube (ekame)

- myspace Music (KURA KURA)

- The 25 Most Tweeting About AR

- Twitter most popular

- AR Mind Map

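- AMeeT: An Introduction to Augmented Reality (Nissha Foundation for Printing Culture and Technology)

- Digital Signage and AR (Digital Signage Research Institute)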

- Capital newspaper

- Pingoo

- The much-talked-about AR/VR is evolving smart work (Smart Work Research Institute)

The 90th instructor at the online school Schoo

AR, VR, MR + HMD, and Smart Glasses Are Transforming Life and Business

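感覚デバイス開発 (Sensory Device Development)

Across every industry, attention is focused on creating new devices, systems, and services. This book is aimed especially at researchers and developers working on sensor elements and sensing systems, researchers working on sensory-organ substitutes in robot development, and people in related industries.

よくわかるAR〈拡張現実〉入門 (An Easy Introduction to AR)

Besides drawing attention as a next-generation promotion technique, AR is expected to be widely used and developed as a tool for entertainment, communication, education, and medicine. This introductory guide to the world of AR is now available as an e-book.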

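The USC School of Cinematic Arts World Building Media Lab is exhibiting "LEVIATHAN" at the Intel CES 2014 Exhibit. This experimental storytelling platform explores new possibilities in entertainment, where physical and virtual worlds connect through the power and flexibility of technology. In the future, experiences like this will probably be possible at home as well.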

[LAS VEGAS] [Jan. 6, 2014] – The USC School of Cinematic Arts World Building Media Lab is debuting “LEVIATHAN” at the Intel CES 2014 Exhibit. This experimental storytelling platform explores new possibilities in entertainment—where physical and virtual worlds connect through the power and flexibility of technology. Emerging between the console and the home theater, LEVIATHAN lives on the digital rim, where the robust storytelling of film and literature meets the physical interactivity of gaming. This hybrid storytelling platform draws inspiration from both narrative & gameplay and delivers engagements that are both physical & digital. This is a space where the author controls the story, and the audience plays inside that story. Using an Intel powered 2 in 1 as a portal, fans will play with “Huxleys” (genetically engineered flying jellyfish) and follow the Leviathan whale on her voyage through CES.(via http://digitalrim.org/press/)

As an example of what the Oculus Rift VR headset can do, a system that lets you shake hands with a virtual idol has already been realized, but it seems even this is now possible. It appears to be under development at the University of Tsukuba.

The Oculus Rift is connected to a PC, and the content is delivered from the PC. When you lie down and look up, the display shows someone looking down at you from above. When you turn to either side, a different girl appears to be lying beside you on the left and on the right, and they talk to you.

It is pretty far out, but I have a feeling it will catch on.

(via Itai News (痛いニュース))

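Color in the clothes on paper, launch the dedicated smartphone app, and hold the camera over the page: a fashion model then wears the outfit and puts on a fashion show. The recognition area is defined by square markers printed at three corners of the front and back pages. Because processing starts only after the page has been recognized twice, girls actually playing with it will probably need to get the knack. Simply holding the camera over the image, with no extra steps, would be the more intuitive operation.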

(more http://www.techlicious.com/blog/the-future-of-toys-is-augmented-reality/)

Design your own clothing, then put it on display in your own fashion show! Create magazine covers featuring your latest styles and share with friends, with My Virtual Fashion Show from Crayola!(via http://www.youtube.com/watch?v=CLbasMYkiKA#t=30)

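A face recognition prototype using Kinect, unveiled by metaio at MWC 2014. It may take a little longer to reach a practical level, but it is a technology worth looking forward to.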

The demo we experienced at Mobile World Congress was just a prototype of what the feature will be like when it debuts in Metaio’s SDK, but it was accurate enough to show how it would work. During our hands-on session, we turned our head from left to right so that the Kinect could read the front and sides of our face. We had to do this very slowly, however, so that the camera could get the most accurate reading. (via http://www.tomsguide.com/us/metaio-augmented-reality-mwc-2014,news-18372.html)

It looks like many HMDs will be released in 2014. Sony has also unveiled SmartEyeglass, a binocular-type concept model.

Sony’s wearable tech gained some much needed definition at MWC this week, but it’s still clearly an unfinished concept. There are a number of companies currently chasing the dream of getting users to wear computers on their face for extended periods of time. Google is one of the first to make their project both publicly known and actually practical to wear all day, so there have been several attempts to follow suit with designs that are more stylish or more featureful.(via http://www.geek.com/android/sonys-smarteyeglass-concept-predicts-a-text-driven-augmented-reality-future-1585649/)

Sony’s SmartEyeglasses is neither of those things, but it does allow for binocular vision that Google Glass does not, which means the potential for augmented reality services is much higher.

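An AR-based campaign will run to promote the film 『偉大なる、しゅららぼん』 (starring Gaku Hamada, Masaki Okada, Kyoko Fukada, and others), which opens nationwide on Saturday, March 8.

Campaign overview: To commemorate the film's release, you can take commemorative photos with the characters at power spots across Japan. Posting the photos you take to Twitter also enters you in a prize draw. Winners receive rare merchandise from the film.

■ Film 『偉大なる、しゅららぼん』 [Official page]

http://shurara-bon.com/

■ LIVE SCOPAR [Official page]

The campaign uses LIVE SCOPAR, an AR app from Rei Frontier Inc. (レイ・フロンティア株式会社).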

http://livescopar.com/

Future Predicting App Concept by Dor Tal from Dezeen on Vimeo.

Israeli designer Dor Tal’s Future Control project uses data generated on social networks to help users predict the future and take action ahead of time. This personal horoscope built on your data could be used for everything from when you're most likely to go to the gym, to predicting what mood your partner is going to be in when they get home. Tal's concept works in two ways. The first requires the user to download an app on to their smartphone that scours their social networks and any data generated about user. An algorithm based in a cloud system of servers then crunches through the numbers to detect any patterns of behaviour that could be forecast ahead of time. The more accounts the user adds, including credit card information, Google, Apple and Facebook, the more intelligent it becomes.(via https://vimeo.com/87299033#embed)

Being able to try out the placement of furniture or a fitted kitchen before buying would be very convenient.

(via http://www.youtube.com/watch?v=_JS4zhI1erQ&feature=youtube_gdata)

The Turkish furniture sector is meeting a new technology. We will tell you about a new sales channel that will revolutionize the furniture sector. Through a digital experience, you can let consumers truly experience your promise or benefit before producing the actual product or offering the service. Augmented reality work for furniture. Sofa re-covering program. Furniture augmented reality. Furniture drawing program.

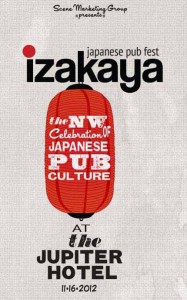

Lumenal Code painted this interactive chalk mural for Izakaya 2012, a celebration of Japanese Pub Culture held at the Jupiter Hotel in Portland, Oregon on Friday, November 16th. We activated the chalk mural using Layar. Scanning the seven panels of the mural brought them to life with an animated version of the story, our take on the classic monster vs. robot battles. At the beginning and end of the mural we had links to share the project on Twitter and Facebook, as well as a link to our website.

How We Did It:

Our process involved a couple days of concept sketching, where Dan and I bounced ideas back and forth and came up with the theme and layout for our mural. We also designed our two characters, the Samurai Robot and the Monster. Then we spent a day working from our sketches to create our final digital image. This image served as our base for the animations and the chalk mural.

(via http://www.augmentedart.com/projects/izakaya-chalk-mural/)

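Wearable technology is being showcased at fashion shows too, to unique effect. Beyond the visual staging, the taste shown in the minimal-style music playing in the background is superb. It is a pleasure to listen to.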

Fashion House CuteCircuit Debuts Wearable Technology Collection At NYFW With Apparel Controlled By iPhone App

Fashion house CuteCircuit debuted their latest wearable technology collection during NYFW 2014 with a line of styles controlled by an iPhone app. The futuristic fashions of CuteCircuit's haute-tech collection are reminiscent of a modern day chameleon, using the iPhone app to change attire's colors and appearance. Despite the garment's high-tech features, CuteCircuit designers Ryan Genz and Francesca Rosella upheld a high-fashion aesthetic. Simply put, people will actually want to wear these wearables.

The full show! Complete recording of the landmark New York Fashion Week debut of the interactive fashion label CuteCircuit. The show brings for the first time to a major international fashion week fashions that include advanced wearable technology, seamlessly integrated in beautiful couture and demi-couture ready-to-wear. For the first time in fashion's history the models control what their dresses will look like on the runway through their mobile phones.

Combining the image recognition technology of Wikitude, a long-established AR platform, with Google Glass lets you see related information immediately while reading a book.

Have you ever read a book wondering what the events described were actually like? With the power of Wikitude's image recognition engine and Google Glass, you no longer have to wonder. The Wikitude SDK has been optimized to power incredible experiences on Google Glass. This demonstration video is just one example of what can be done with Wikitude on Glass. Get started developing with Wikitude for Glass today with our optimized SDK at: http://www.wikitude.com/glass (via http://www.youtube.com/watch?v=JcXfvNihmFg&feature=youtube_gdata)

Many buried pipes, such as water and sewer pipes, lie underground where they cannot be seen without digging. For repairs and construction work, they need to be identified accurately. AR technology can solve this problem.

(via http://www.youtube.com/watch?v=ZeecbYwawr0&feature=youtube_gdata)

Helmet-type wearable devices are already in use, for example by soldiers. Google Glass will likely also find uses in settings such as the Mars Desert Research Station featured in the video.

Augmented reality helmet for soldiers exceeds expectations (more http://eandt.theiet.org/news/2014/feb/q-warrior.cfm)

A crew at the Mars Desert Research Station has tested Google Glass integrated into a space suit helmet to improve astronauts' navigation and communication. Check what they say about their experience. (via http://www.youtube.com/watch?v=i1ARzGUFkJE#t=199)

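In augmented reality, the visual side gets most of the attention, but work on taste, hearing, and smell has also begun. This one can be described as an experiment in whether the human sense of taste can be "fooled."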

http://storiesbywilliams.files.wordpress.com/2014/02/digital_taste_interface1.png?w=396&h=237

(via http://storiesbywilliams.com/2014/02/20/the-future-is-here-vr-taste-buds-and-google-nose/)

A virtual-world experience device straight out of the films Total Recall or Vanilla Sky. Will devices that extend the senses like this ever appear?

Remy Cayuela made a nice video of the clip “I got U” from Duke Dumont, representing a young guy experimenting, through an augmented reality helmet, the life that every man would ever want…

(via http://www.neversaymedia.com/blog/2014/02/21/the-helmet-that-gives-you-the-life-youd-ever-want/)

Duke Dumont - I got u from Remy Cayuela on Vimeo.

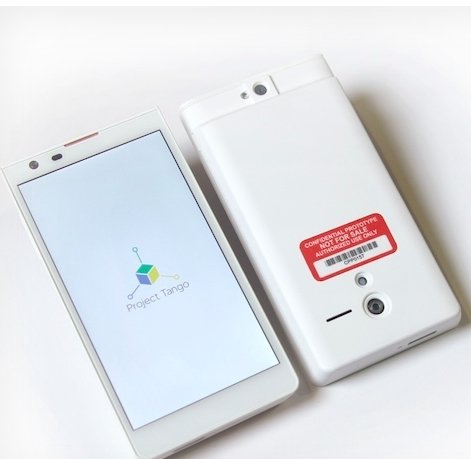

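Google has launched Project Tango: an experimental smartphone and developer kit equipped with 3D sensors that can map indoor and outdoor environments. Since around February 20, 2014, the whole world has been buzzing about it.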

(article from Japan http://appllio.com/20140222-4898-google-project-tango-3d-mapping-movie)

Google today launched Project Tango, an experimental smartphone and developer kit that incorporates 3D sensors able to map indoor and outdoor environments. Designed as a 5-inch phone containing custom hardware and software, the first Project Tango prototype makes more than a quarter million 3D measurements each second, tracking three-dimensional motion to create a visual 3D map of the space around itself. Google describes Project Tango primarily as a mapping tool, automatically capturing the world around each user to provide directions, dimensions, and environmental maps.

What if you could capture the dimensions of your home simply by walking around with your phone before you went furniture shopping? What if directions to a new location didn't stop at the street address? What if you never again found yourself lost in a new building? What if the visually-impaired could navigate unassisted in unfamiliar indoor places? What if you could search for a product and see where the exact shelf is located in a super-store?

The company also pictures Project Tango as the first step towards fully immersive augmented reality games that merge gameplay with real world locations.

Imagine playing hide-and-seek in your house with your favorite game character, or transforming the hallways into a tree-lined path. Imagine competing against a friend for control over territories in your home with your own miniature army, or hiding secret virtual treasures in physical places around the world?

According to TechCrunch, Project Tango utilizes a vision processor called the Myriad 1, from Movidius, which is incredibly power efficient compared to other 3D-sensing chips on the market. The power necessary for 3D chips to function has thus far been one of the major issues preventing the technology from being incorporated into a smartphone, but because it functions like a co-processor much like Apple's own M7 motion co-processor, it is able to draw less power.(via http://www.macrumors.com/2014/02/20/google-project-tango/)

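An AR app that recognizes a cube. It recognizes the sides of the box, and you can watch a cookie man or Lego man move around inside as if alive. It creates a strange sensation. (The act of putting an item into the box does not appear to be related to the AR itself.)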

Qbox is an educational prototype for children consisting of a little cube robot, a toy figure and a mobile device application. The kid can interact with the cube through the augmented reality displayed on the device. The kid has to introduce a little figure into the cube. When the AR is displayed the character will appear inside the cube and will become alive. The kid will be able to see the character inside the cube and interact with it. Any type of application can be developed using this new way of interaction. A new relation is created between the kid and the character. (via http://www.youtube.com/watch?v=OCC5CshnC10&feature=youtube_gdata)

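Niconico Gakkai Beta (ニコニコ学会β) was launched as a user-participation academic society and draws audiences in the tens of thousands each time. Its fifth symposium, held at Nicofarre on December 21, 2013, featured five sessions. This article introduces "100 Research Projects in a Row" (研究100連発), the highlight of the whole event, chaired by Professor Inami of Keio University, with presentations focusing on human senses. (via http://www.atmarkit.co.jp/ait/articles/1402/14/news001.html)

The third presenter was Naotaka Fujii (team leader at the RIKEN Brain Science Institute), whom chair Inami himself introduced as "doing madder research than Inami." He conducts research on perception and brain science. His research, introduced as "weaving a new reality by hacking the brain," centered on the brain and the senses. Starting from the beginning of his career as an ophthalmologist, the talk moved on to research on how vision works in the brain: a study examining the differences between visual and bodily information when a large number of electrodes are inserted into a monkey's brain. He reportedly inserted as many as 200 electrodes into various parts of the brain for the study, and collecting enough data for the research alone took about two years.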

Fujitsu Laboratories has announced a unique glove-type wearable device.

Press Release, February 18, 2014 (Japanese: http://pr.fujitsu.com/jp/news/2014/02... English: http://www.fujitsu.com/global/news/pr...)

Fujitsu Laboratories Ltd. announced that it has developed a wearable device in the form of a glove, equipped with a Near Field Communication (NFC) tag reader and gesture-based input, for maintenance and other on-site operations. (via http://www.youtube.com/watch?v=BefBWuap3x4&feature=youtube_gdata)

This glove device will be exhibited at Mobile World Congress 2014, running February 24-27 in Barcelona, Spain.

Copyright © Augmented Reality & Virtual Reality World | 拡張現実と仮想現実の世界 All Rights Reserved